Data protection in the US has historically been like the wild west. That’s according to Nanda Kumar, a professor at Baruch College’s Zicklin School of Business and an expert in technology policy, business analytics, and information privacy issues.

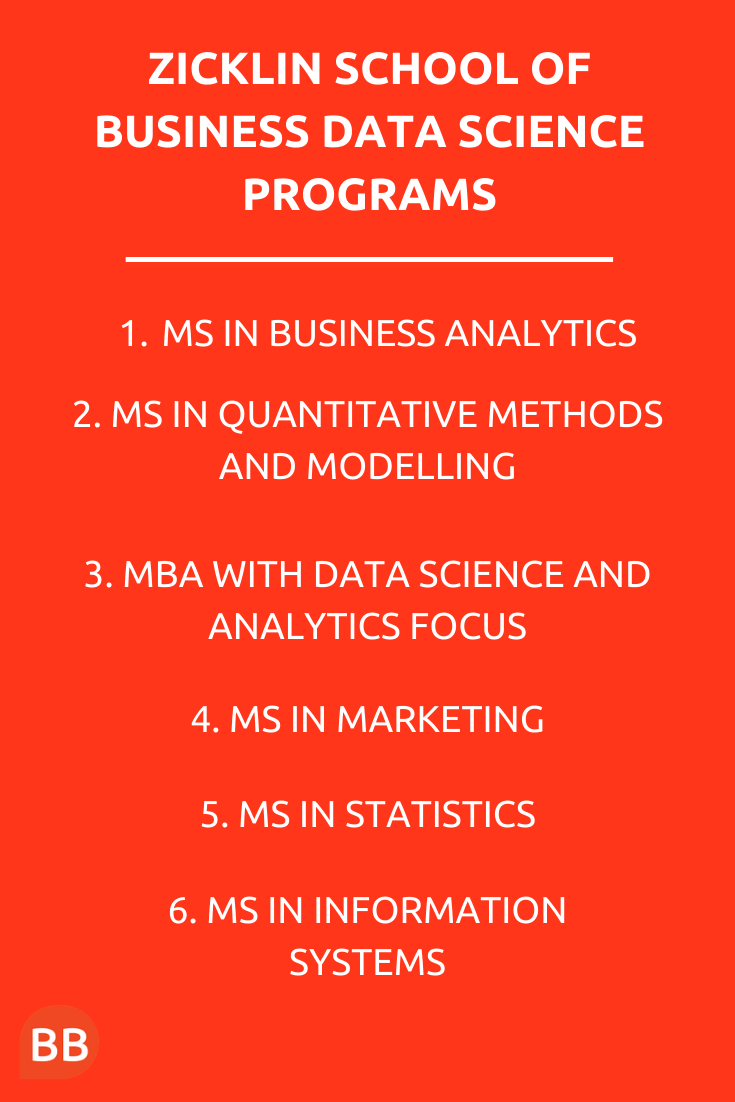

Managing big data responsibly is imperative for data science professionals graduating from the Zicklin School of Business. The school runs six data science programs with a thread of data ethics running through each.

But what do Zicklin’s data science students learn? What do they need to know about data protection in the US?

Data protection in the US has historically been like the wild west

Unlike the EU, which now has the General Data Protection Regulation (GDPR) to protect citizens’ personal data, the US has no central, Federal privacy law. When it comes to consumer data specifically, there is no effective protection for users at the Federal level.

The Privacy Act of 1974 is limited to data collected by the US government from its citizens. It doesn’t cover private industry or data collected by companies on the internet. Although a patchwork of laws protects data in specific industries and use cases, such as finance, healthcare, and education, Nanda explains that the overall approach is still quite relaxed.

“My intuition is that historically the US has been more averse to regulation,” he says. “The US has had a more laissez faire attitude to companies collecting data. Historically the labor market in the US is different, there’s more risk taking, more innovation, but the flip side is that there’s less regulation and less stringency on data privacy.”

There’s a constellation of issues in the US around ethics and data protection, adds Marios Koufaris, professor and chair of the Paul H. Chook Department of Information Systems and Statistics at Zicklin.

The issue isn’t just the privacy of existing data; it extends to transparency and communication with the data owners: the consumers. There are also questions around auditing the algorithms that process data, and around tackling bias and discrimination in artificial intelligence.

Although data protection is lacking at the Federal level in the US, a few states have enacted their own legislation. The California Consumer Privacy Act (CCPA), which came into effect in January 2020, bears some resemblance to GDPR.

New York, Maryland, Massachusetts, Hawaii, and North Dakota also have data protection legislation pending. These bills aim to give consumers the right to access and delete their data, and to fine companies that don’t comply.

Not everyone is concerned about data privacy

Marios (pictured above) explains that, from the consumer perspective, attitudes to data privacy in the US have been relaxed.

“American users have been willing to look the other way when their data has been used or they’re receiving ads based on their own private information,” he says, “with the expectation that they’re getting something in return which has traditionally been free services.”

In Nanda’s view, the exponential growth of free content has created a problem: a lack of concern about our data could mean we miss the chance to give users more control over their data, including the option to monetize it more effectively than existing systems allow.

People differ in how much they care about how their information is collected and used, and that’s fine. But until now the stakes have mostly been low. That is changing.

“Individuals are used to getting free content but in return are giving something up and they don’t realize how valuable it could be,” he says.

Recent high-profile cases of data misuse, such as the Cambridge Analytica scandal, have heightened people’s awareness of the issues at hand.

Concerns are rising over facial recognition technology

The issues surrounding online consumer data are taken up a notch when facial recognition is involved, and concerns are rising in the US.

“In the past I’ve interviewed some students to see what they think about privacy, and how Facebook collects and monetizes their data,” explains Nanda. “Typically, it’s not such a big deal, but most of these folks start to care more when the issue is about facial recognition.”

Nanda’s students are becoming more aware of how their data is being collected and used. And the facial recognition debate is becoming a debate about civil liberties and freedom. “I do think that increased awareness about data aggregation, coupled with control issues around facial recognition, is forcing consumers and lawmakers to take privacy violations more seriously,” he says.

The ability to instantly pull up information about you is a concern, especially when that information has been gleaned from social media profiles without consent. Clearview AI, a facial recognition software company, is currently facing lawsuits for scraping photos of people from LinkedIn and Instagram and selling the resulting database to law enforcement agencies in the US.

Facial recognition also has a race issue. Research has shown that some facial recognition algorithms had error rates of up to 34.7% when classifying Black women. Other algorithms were also shown to perform worse on Black, Asian, and Native American faces.

Nanda is adamant that if we don’t take the necessary action to identify these critical issues and regulate them in a nuanced way, the trade-offs between users’ privacy and innovation around data products could become harder to manage.

How Zicklin School of Business is training students to manage data responsibly

The next generation of data science professionals will need expert competence in matters relating to data protection and privacy.

At the Zicklin School of Business, the approach is broad. Discussion of data privacy and transparency is embedded across the curriculum, and students learn by applying data science technology and picking through the data themselves.

“When we have a course on data mining, the course doesn’t just teach students how to use certain tools or how to learn certain methods. It starts from a business problem and goes through the analysis of the problem with students learning the tools and the skills,” explains Marios.

Issues of data privacy and the ethical use of data arise as students analyze the business problem. Learning to use these tools in the context of real-life business cases is crucial to the future career success of Zicklin’s data science students.

“Ethical awareness is one of the main learning goals of pretty much all of our programs at Zicklin,” Marios contends. “This means that the majority of our courses have to have components that in some way address ethical issues and raise awareness of them. This is something we’re always taking into account.”

Read more about the Zicklin School of Business:

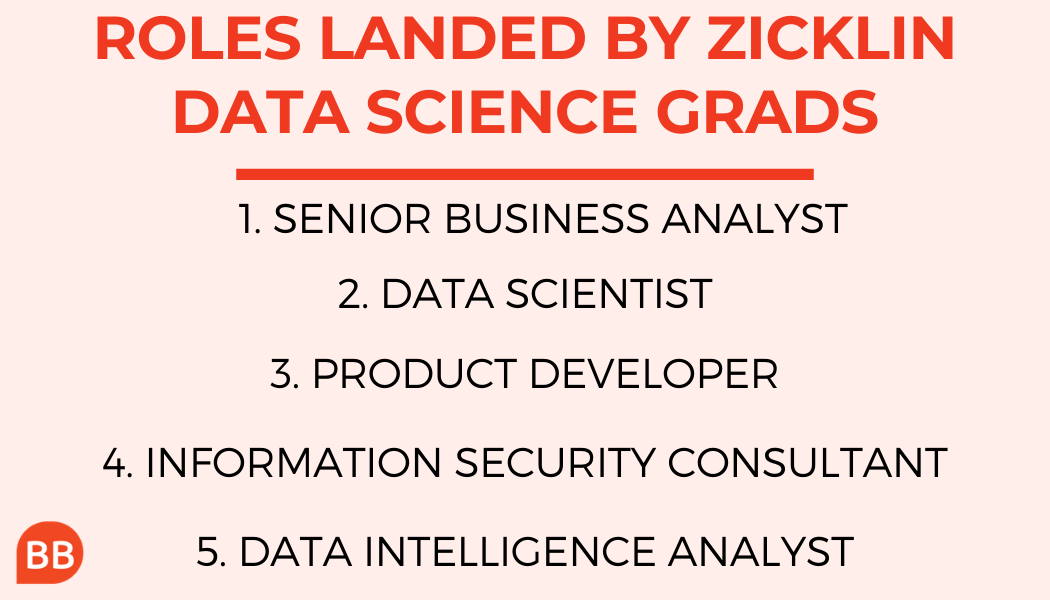

5 Of The Best Data Science Jobs You Can Get After Business School